Overview

Scientists and engineers leading missions like NASA’s Artemis program and future Mars expeditions, along with planetary researchers studying our solar system’s evolution, rely heavily on high-resolution imaging to plan missions and study planetary bodies and moons.

However, the orbital cameras capturing these images operate in extreme environments, and challenges like extremely low-light illumination over permanently shadowed regions on the Moon and the ageing and degradation of sensors over Mars hinder the acquisition of clear images.

Our goal is to accelerate discoveries in planetary science by designing state-of-the-art image processing tools, based on scientific machine learning (SciML), that can overcome these challenges. We are leveraging vast amounts of planetary data to train and validate these tools.

Research highlights

Illuminating the Moon’s permanently shadowed regions

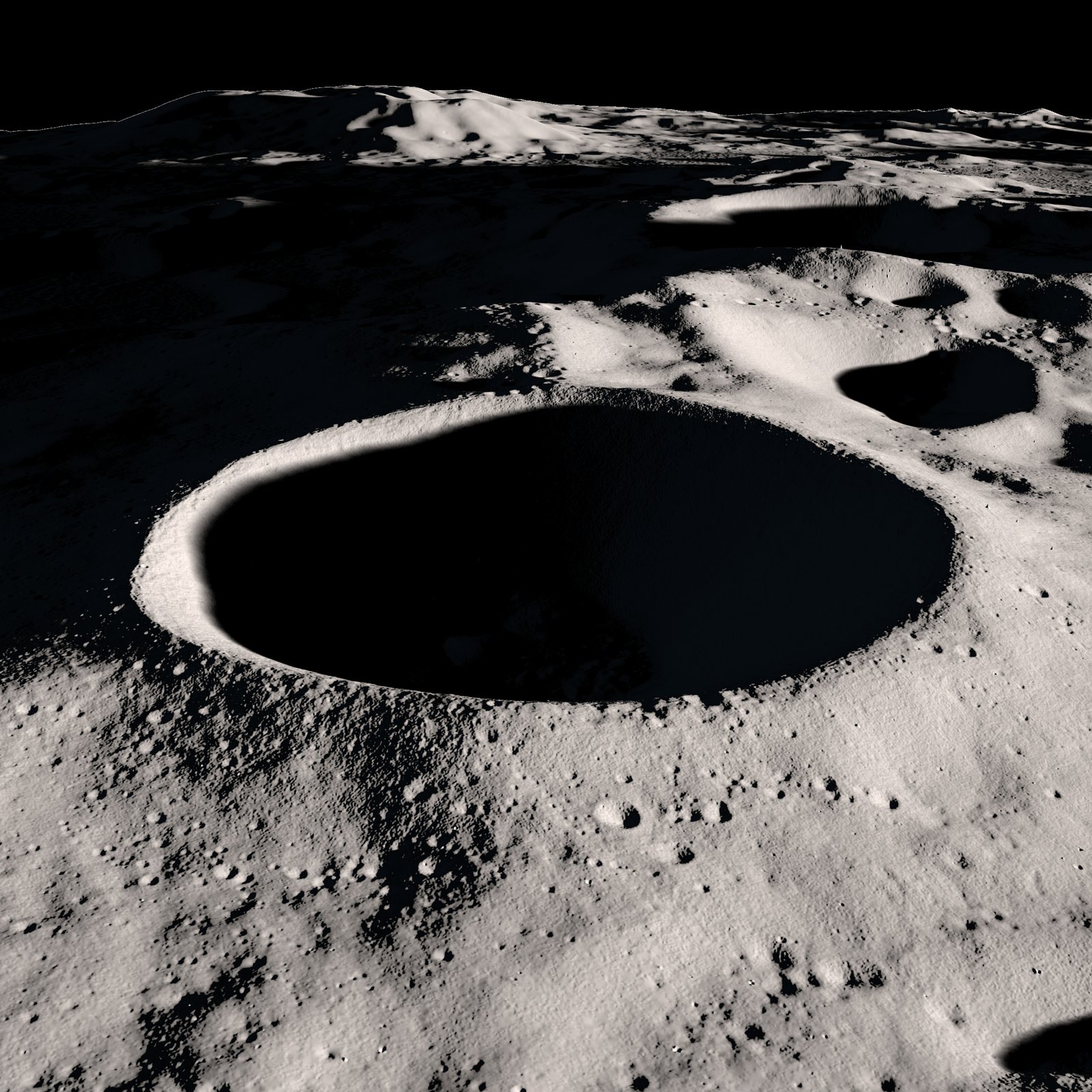

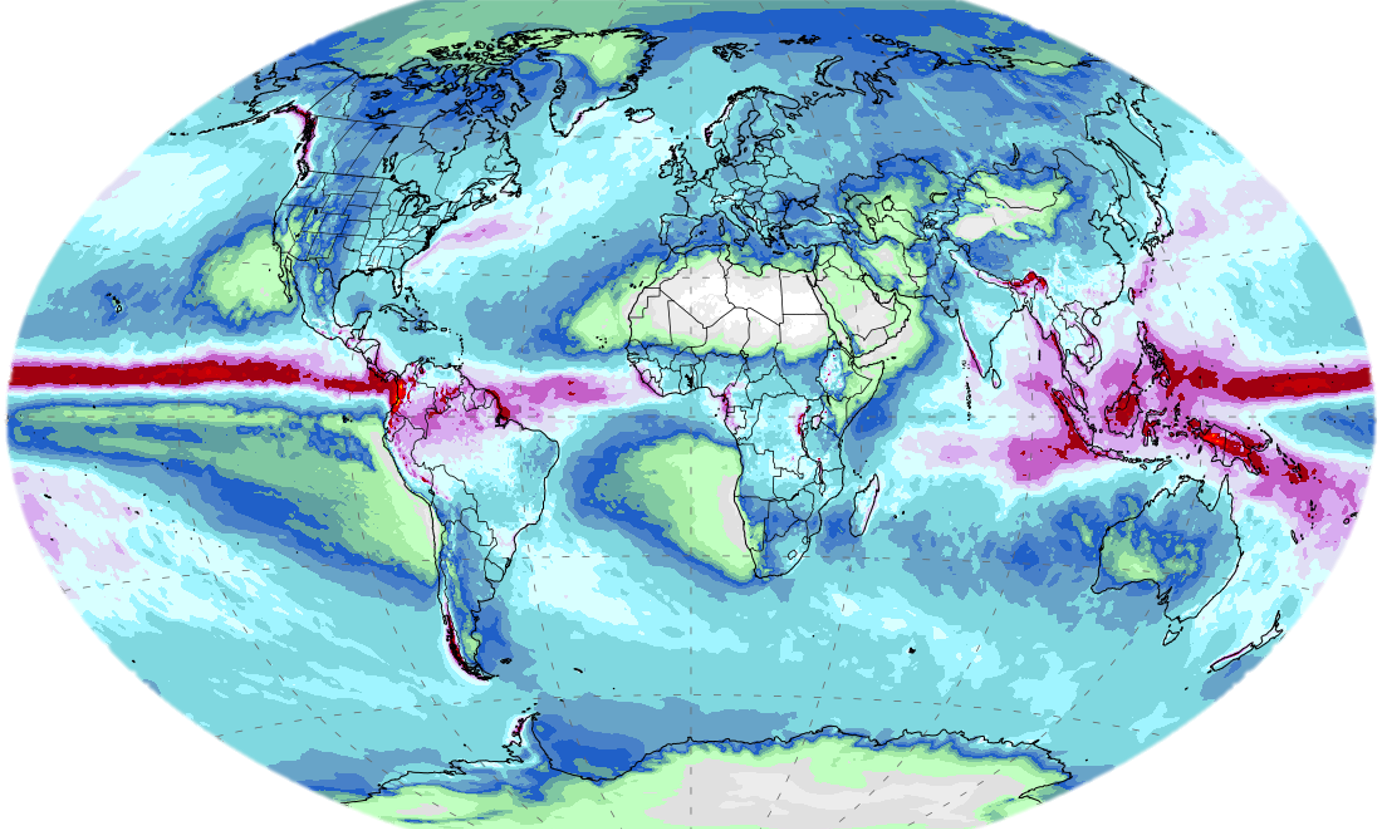

Permanently shadowed regions (PSRs) on the Moon, such as craters and other topographic depressions, are of significant interest to mission planners and planetary scientists because they are thought to contain water ice (see feature image), making them key to sustaining human presence in the future. However, PSRs never see direct sunlight, and are only illuminated by extremely low levels of secondary scattered light from their surroundings. This makes them extremely challenging to image (Fig. 1).

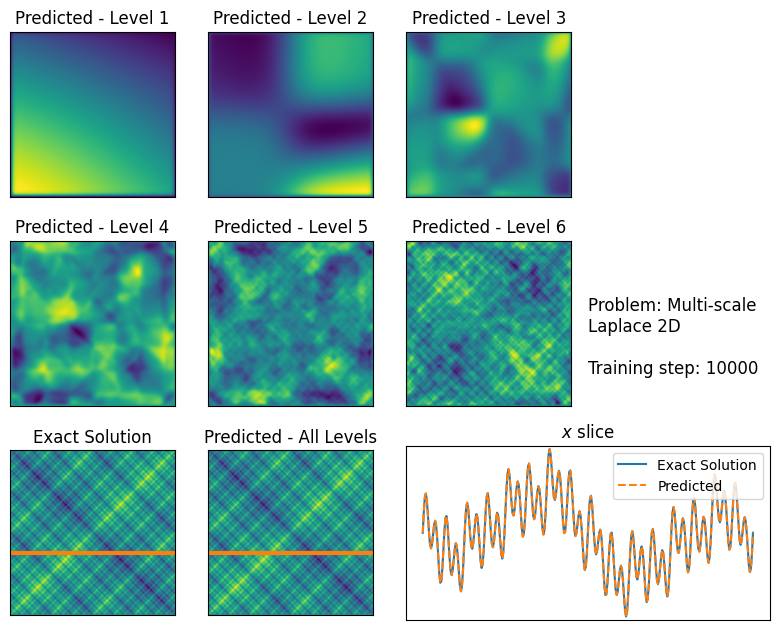

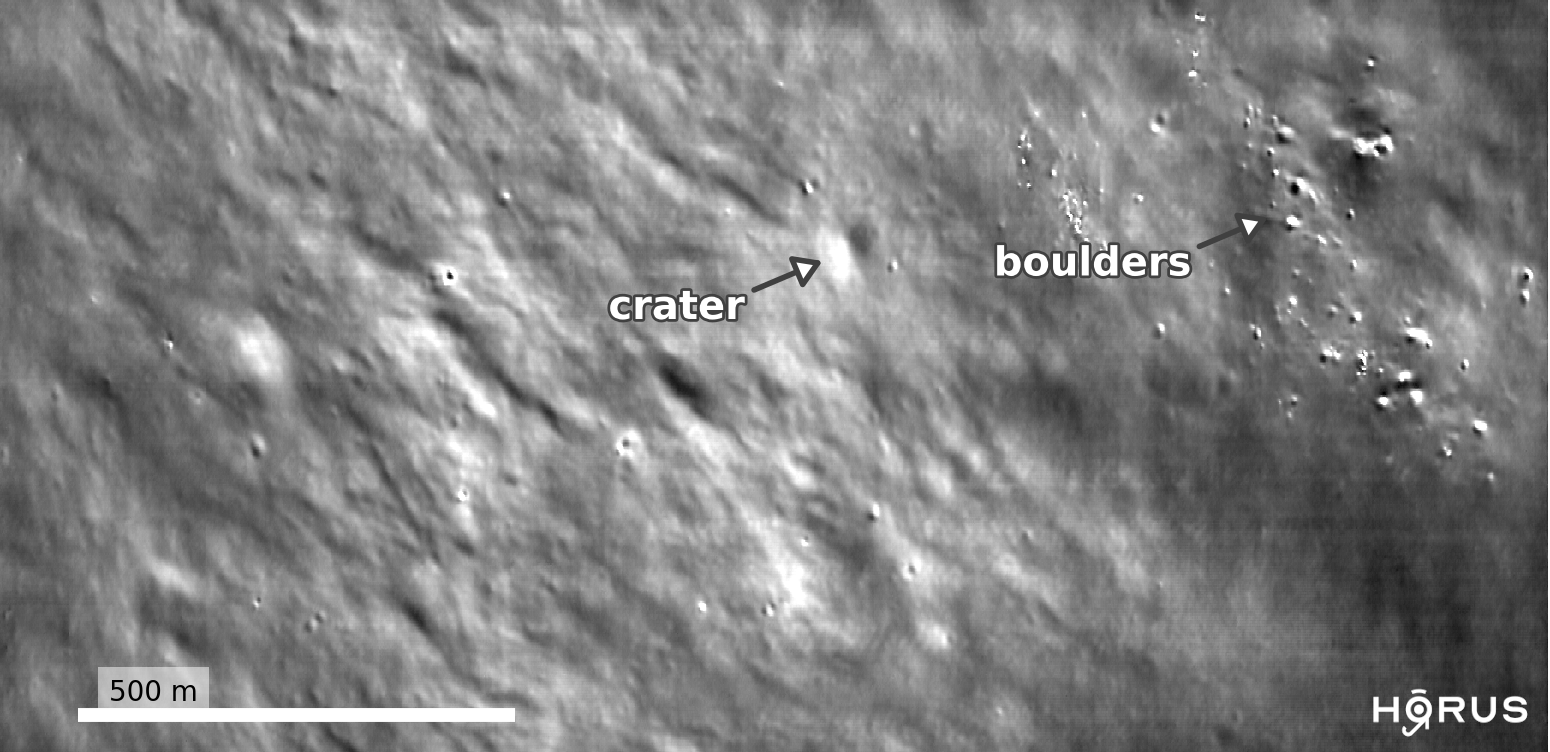

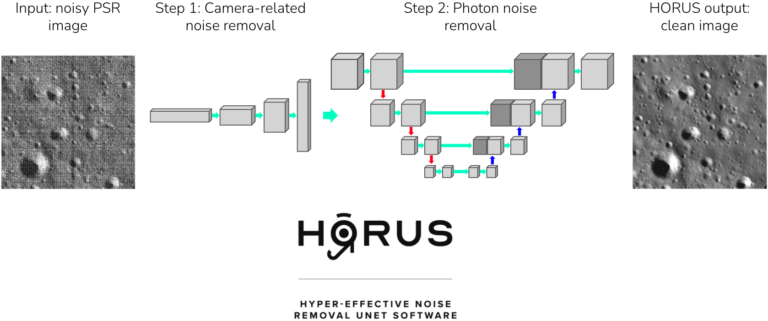

We developed deep learning techniques to enhance low-light images of PSRs captured by the Lunar Reconnaissance Orbiter’s Narrow Angle Camera (LRO NAC). Our approach, called HORUS (Hyper Effective nOise Removal U-Net Software), uses a physical noise model and real calibration measurements to accurately model and remove noise in these images. For the first time, HORUS reveals geological features as small as 3 meters, such as boulders and craters, inside PSRs (Fig. 2). This enables mission planners to plan traverses into PSRs and helps scientists better understand these extreme environments.
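To make the idea of a physical noise model concrete, the sketch below generates a (noisy, clean) training pair by darkening a well-lit image and adding synthetic sensor noise. It is a generic, simplified CCD noise model and not the HORUS model itself: the function names, the choice of noise terms (shot noise, dark current, read noise) and all parameter values are assumptions made for illustration, whereas HORUS relies on real calibration measurements.

```python
import numpy as np

def add_sensor_noise(clean_electrons, rng, dark_electrons=5.0, read_noise_std=3.0):
    """Simulate a noisy low-light exposure from a clean signal (in electrons).

    All parameter values are illustrative assumptions, not LRO NAC calibration data.
    """
    # Photon (shot) noise: photon counts are Poisson-distributed around the true signal.
    shot = rng.poisson(clean_electrons)
    # Dark current: thermally generated electrons, modelled here as Poisson as well.
    dark = rng.poisson(dark_electrons, size=clean_electrons.shape)
    # Read noise: modelled as zero-mean Gaussian noise from the readout electronics.
    read = rng.normal(0.0, read_noise_std, size=clean_electrons.shape)
    return shot + dark + read

def make_training_pair(bright_image, rng, scale=0.02):
    """Darken a well-lit image to a low signal level and add synthetic noise."""
    clean = bright_image * scale
    noisy = add_sensor_noise(clean, rng)
    return noisy, clean

rng = np.random.default_rng(0)
bright = rng.uniform(500.0, 2000.0, size=(64, 64))  # stand-in for a sunlit image
noisy, clean = make_training_pair(bright, rng)
```

Pairs like these could then be used to train a denoising network to map `noisy` back to `clean`. The value of a physical model is that the synthetic noise resembles what the real sensor produces in low light.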

Restoring Mars imagery

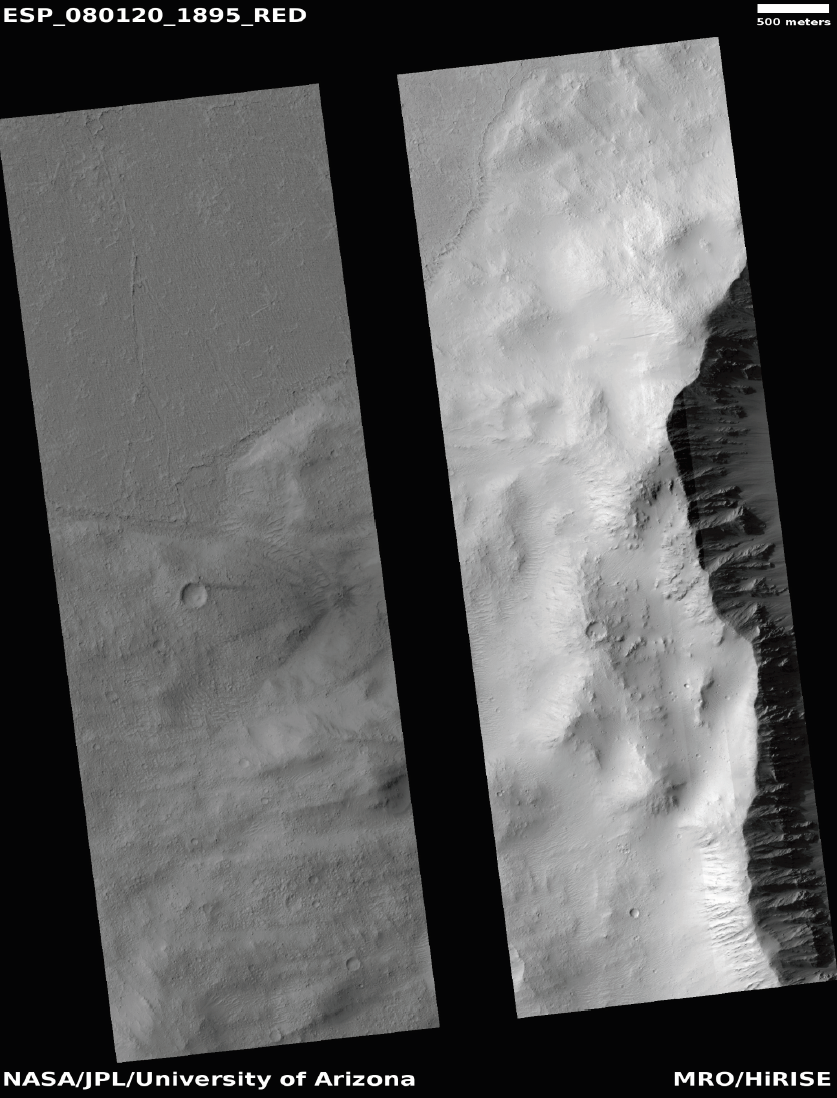

The High Resolution Imaging Science Experiment (HiRISE), a camera aboard the Mars Reconnaissance Orbiter, has played an essential part in understanding the Martian surface since its launch in 2005. Unfortunately, in July 2023 some pixels covering the centre of the images permanently malfunctioned, leaving a large gap of missing data (Fig. 3). We are currently developing SciML-based methods to reconstruct these missing pixels from their surrounding pixels and from other wavelength bands, restoring the integrity of the images. This restoration is crucial for accurate terrain analysis and mission planning on Mars.
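As a point of comparison for reconstructing missing pixels from their surroundings, the sketch below fills a vertical gap by linear interpolation along each image row. This is a naive baseline and not the SciML method under development: the function name `fill_column_gap`, the rectangular gap shape and the toy data are assumptions for illustration, and the sketch ignores the other wavelength bands entirely.

```python
import numpy as np

def fill_column_gap(image, gap_start, gap_stop):
    """Fill columns [gap_start, gap_stop) by linear interpolation along each row."""
    filled = image.astype(float).copy()
    cols = np.arange(image.shape[1])
    # Mark which columns still hold valid measurements.
    valid = np.ones(image.shape[1], dtype=bool)
    valid[gap_start:gap_stop] = False
    for r in range(image.shape[0]):
        # Interpolate the missing columns from the valid pixels in the same row.
        filled[r, ~valid] = np.interp(cols[~valid], cols[valid], filled[r, valid])
    return filled

# Toy example: a horizontal brightness ramp with three missing columns.
truth = np.tile(np.arange(10, dtype=float), (4, 1))
corrupted = truth.copy()
corrupted[:, 4:7] = np.nan  # simulated data gap
restored = fill_column_gap(corrupted, 4, 7)
```

Interpolation recovers smooth gradients like this ramp exactly, but it cannot recreate boulders, crater rims or other fine structure that falls inside the gap. That limitation is the motivation for learned reconstruction methods.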

Applications and impact

Our PSR image denoising technology was used by NASA to plan safer traverses for their upcoming VIPER mission, which will drive a rover directly into a PSR to search for water, and to help select potential landing sites for the Artemis program. We are currently working with the HiRISE Mars imagery team to deploy our pixel reconstruction methods into their production pipelines.

The long-term goal of this project is to enable mission planners and planetary scientists to make scientific discoveries by developing state-of-the-art SciML tools for interpreting and analysing planetary images.

Future directions

Our future work includes:

- Developing general-purpose foundation models that are capable across image processing tasks and planetary datasets.

- Fusing multiple sensor measurements, such as optical, laser and thermal sensors, into our models to improve image processing, interpretability and scientific discovery.

- Deploying our SciML tools into production pipelines by working with domain scientists and mission planners.

Contact / get involved

Please contact us if you are interested in contributing to or learning more about this project.

Publications

Bickel, V. T., Moseley, B., et al. “Peering into lunar permanently shadowed regions with deep learning.” Nature Communications (2021).

Moseley, B., et al. “Extreme Low-Light Environment-Driven Image Denoising over Permanently Shadowed Lunar Regions with a Physical Noise Model.” IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2021).

Bickel, V., Moseley, B., Hauber, E., Shirley, M., Williams, J.-P., & Kring, D. A. “Cryogeomorphic Characterization of Shadowed Regions in the Artemis Exploration Zone.” Geophysical Research Letters (2022).

Team & collaborators

- Ben Moseley: Imperial College London.

- Valentin Bickel: University of Bern.

- Ignacio Lopez-Francos: NASA.

- Loveneesh Rana: NASA FDL.

- Mark Shirley: NASA Ames Research Center.

- Ardan Suphi: Imperial College London.

- Gareth Collins: Imperial College London.

The initial results on PSR denoising were produced during NASA’s 2020 Frontier Development Lab (FDL). We would like to thank the FDL and its partners (Luxembourg Space Agency, Google Cloud, Intel AI, NVIDIA and the SETI Institute) for their support.